View Server

View Server (VS) is a comprehensive software system designed for the management of earth observation data and metadata in the cloud. It is specifically tailored to handle various tasks related to earth observation, providing a range of services and components for efficient data management. This monorepo contains all the necessary and optional components and the helm deployment chart of View Server.

For more detailed information check out the documentation: https://vs.pages.eox.at/documentation/

Components

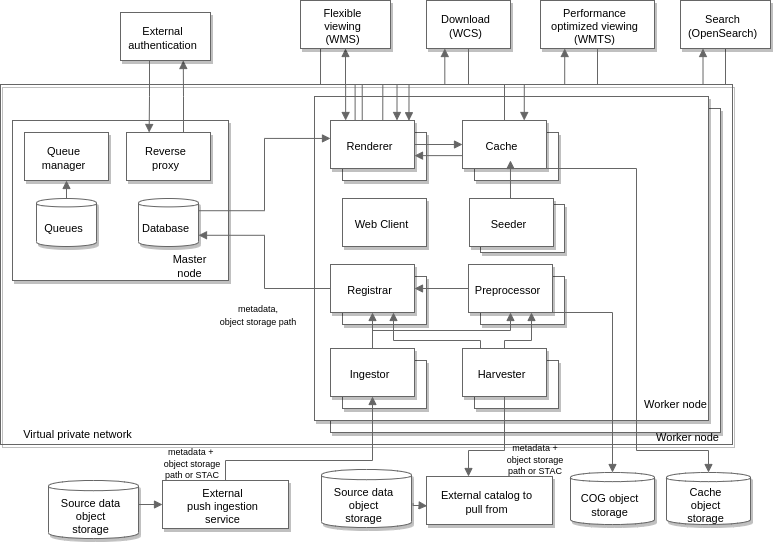

The View Server (VS) software system is composed of the following components:

Database - Utilizes PostgreSQL and SpatiaLite to store relevant data. It serves as the primary data storage and retrieval system for the View Server.

Message Queue - Utilizes Redis for communication between various data services within the View Server system. It facilitates efficient and reliable message passing between components.

Renderer - Powered by EOXServer, the Renderer component provides OGC (Open Geospatial Consortium) services for rendering and serving geospatial data to clients. It enables the visualization and analysis of Earth Observation data.

Registrar - Also utilizing EOXServer, the Registrar component is responsible for loading data into the View Server system. It manages the ingestion and registration of new datasets, ensuring their availability for processing and visualization.

Cache - Relies on MapCache for image caching purposes. It enhances the performance of the system by storing pre-rendered images, reducing the need for repetitive rendering operations.

Harvester - Extracts metadata from various services and sources, allowing the View Server to gather relevant information about available datasets. The Harvester component automates the process of acquiring metadata, enabling efficient data discovery within the system.

Scheduler - Schedules other data services, allowing further automation on a predefined schedule. Most commonly it schedules the Harvester component to trigger periodic extraction.

Preprocessor - Transforms data for optimal viewing and analysis within the View Server. Also transforms metadata if necessary. This component performs various preprocessing tasks such as data transformation, format conversion, and data enhancement, ensuring data compatibility and usability.

Client - The front-end component of the View Server system. It provides the user interface through which users can interact with and visualize Earth Observation data. The Client component offers a rich set of features and tools for data exploration and analysis.

Seeder - Facilitates bulk caching of data. The Seeder component enables efficient pre-caching of datasets, ensuring fast and responsive access to frequently accessed data within the system.

Usage

View Server is designed to cater to two main user roles: operators and users.

Operators

Operators are responsible for the installation, maintenance, and management of the View Server system within a cloud environment. Their primary tasks include:

Installation: Operators are tasked with deploying and configuring the View Server system in the desired cloud environment. This involves setting up the necessary infrastructure, provisioning resources, and ensuring proper connectivity.

Data Management: Operators are responsible for maintaining and managing the data within the View Server system. This includes tasks such as ingesting new datasets, updating existing data, and managing data pipelines for efficient processing and storage.

System Health and Uptime: Operators play a crucial role in monitoring and ensuring the overall health and uptime of the View Server system. They monitor system performance, handle any issues or errors that may arise, and take proactive measures to optimize system performance and reliability.

Users

Users of View Server consume the various OGC services provided by the system. They can interact with the system in one of the following ways:

Own Client: Users have the option to use their own client applications to access and consume the OGC services provided by View Server. They can integrate these services into their existing workflows or applications to leverage the capabilities of the system.

Bundled Client: Alternatively, users can utilize the bundled client provided with View Server. The bundled client offers a user-friendly interface for visualizing and exploring Earth Observation data. Users can utilize the features and tools within the client to analyze, query, and visualize the available datasets.

Whether users choose to use their own client or the bundled client, they can take advantage of the wide range of OGC services offered by View Server to access and utilize Earth Observation data effectively.

Installation

All commands must be run from the repo folder. You need the kubectl and helm utilities and either a k3s or a minikube cluster.

Useful commands:

helm dependency update # updates the dependencies

helm template testing . --output-dir ../tmp/ -f values.custom.yaml # renders the helm template files. Used in conjunction with vs-starter

The template command outputs the rendered yaml files for debugging and checking.

Installing chart locally

To test the services together, you can install the chart locally in your k3s/minikube cluster. This install is based on the default values in the repo and customizes them.

Prerequisites

When running k3s and minikube in docker, paths don’t refer to your machine but to the docker container where the cluster runs. The following sections show how to set up each solution.

Minikube

Minikube needs to be started with the following:

minikube start --mount-string /home/$user/:/minikube-host/

minikube addons enable ingress

Here the full home directory is bound to the cluster at /minikube-host in order to bind mounts necessary for development. Also the ingress addon is enabled.

k3s

k3s is likewise started by creating a cluster with a volume specified:

k3s cluster create --volume /home/$user/:/k3s-host/

Here the full home directory is bound to the cluster at /k3s-host in order to bind mounts necessary for development.

values.yaml

The default values.yaml should be enough for a very basic setup, but overriding with a separate one is required for more elaborate setups and deployments.

Persistent volume claims

For the services to start, you need to apply persistent volume claims (PVCs). You may use the PVC definitions provided in tests.

Run kubectl apply -f tests/pvc.yaml to create the PVCs:

data-access-redis

data-access-db

Deploying the stack

Install:

helm install test . --values values.custom.yaml

Upgrade with override values:

helm upgrade test . --values values.custom.yaml --values your-values-override-file.yaml

If you specify multiple values files, helm will merge them together, with later files taking precedence. You can even delete keys by setting them to null in the override file.
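The merge behaviour described above can be modelled in a few lines of Python. This is only an illustrative sketch of the semantics (later files win, nested maps merge key by key, a null value deletes the key), not Helm's actual implementation. The function name merge_values and the sample values are hypothetical.

```python
from copy import deepcopy
from typing import Any


def merge_values(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict with `override` merged on top of `base`."""
    merged = deepcopy(base)
    for key, value in override.items():
        if value is None:
            # A null in the override removes the key entirely.
            merged.pop(key, None)
        elif isinstance(value, dict):
            # Nested maps are merged key by key.
            existing = merged.get(key)
            merged[key] = merge_values(existing if isinstance(existing, dict) else {}, value)
        else:
            # Scalars and lists are replaced wholesale.
            merged[key] = deepcopy(value)
    return merged


# Hypothetical contents of values.custom.yaml and of an override file.
custom = {"renderer": {"replicaCount": 3, "debug": True}, "seeder": {"enabled": True}}
override = {"renderer": {"replicaCount": 1, "debug": None}}

result = merge_values(custom, override)
print(result)
```

In this model the override lowers replicaCount, removes the debug key, and leaves the untouched seeder section as it was.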

Dev domain name

To use the default domain name http://dev.local, add it to your hosts file (on Linux, /etc/hosts):

<IP-ADDRESS> dev.local

where <IP-ADDRESS> is the output of minikube ip. You can then navigate to http://dev.local to access the client.

You might also want to change the number of replicas:

renderer:
  replicaCount: 1

For development, it’s useful to mount the code as a volume. This can be done via a values override.

When using k3s or minikube, it’s enough to define a volume and a matching volumeMount for the container like this, where <app> is k3s or minikube:

registrar:
  volumes:
    - name: eoxserver
      hostPath:
        path: /<app>-host/path/to/eoxserver
        type: DirectoryOrCreate
  volumeMounts:
    - mountPath: /usr/local/lib/python3.8/dist-packages/eoxserver/
      name: eoxserver

The current deployment is configured to use a local storage directory called data, which should be mounted when creating the cluster. The folder contains a number of products for testing and registering.

You should change the mounting path for the registrar and the renderer by replacing the host path of the mounted volumes, for example (for the registrar):

registrar:
  volumes:
    - name: local-storage
      hostPath:
        path: /<app>-host/path/to/vs-deployment/data
        type: Directory
  volumeMounts:
    - mountPath: /mnt/data
      name: local-storage

Where <app> is either k3s or minikube
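If you switch between k3s and minikube often, the override above can be generated instead of edited by hand. The following is a minimal sketch under stated assumptions: the helper name local_storage_override is hypothetical, the host roots are the mount points chosen in the Prerequisites section, and the path below the home directory is the same placeholder used above. It prints JSON, which is a subset of YAML and should therefore be usable as a values override file.

```python
import json

# Mount points chosen in the Prerequisites section for each cluster type.
HOST_ROOTS = {"k3s": "/k3s-host", "minikube": "/minikube-host"}


def local_storage_override(app: str, path_in_home: str) -> dict:
    """Build a registrar values override that mounts a host directory at /mnt/data."""
    if app not in HOST_ROOTS:
        raise ValueError(f"app must be one of {sorted(HOST_ROOTS)}, got {app!r}")
    host_path = f"{HOST_ROOTS[app]}/{path_in_home.strip('/')}"
    return {
        "registrar": {
            "volumes": [
                {
                    "name": "local-storage",
                    "hostPath": {"path": host_path, "type": "Directory"},
                }
            ],
            "volumeMounts": [{"mountPath": "/mnt/data", "name": "local-storage"}],
        }
    }


# Placeholder path, as in the example above; replace it with your checkout location.
override = local_storage_override("minikube", "path/to/vs-deployment/data")
print(json.dumps(override, indent=2))
```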

Preprocessing products

To preprocess the provided products listed in testing/preprocessed_list.csv, which exist in a Swift bucket, you need to specify an output bucket for the preprocessing results.

You can set an arbitrary bucket, which can be removed afterwards:

helm upgrade test . --values values.custom.yaml --set global.storage.target.container=<containerID>

kubectl exec deployment/test-preprocessor -- preprocessor preprocess --config-file /config.yaml "RS02_SAR_QF_SLC_20140518T050904_20140518T050909_TRS_33537_0000.tar"

Note: if no container is specified, the preprocessor creates a container named after the item.

Registering products

You can register products by executing commands either directly through the registrar or through the Redis component (e.g. using a STAC item from testing/product_list.json):

registrar:

kubectl exec deployment/test-registrar -- registrar --config-file /config.yaml register items '<stac_item>'

redis:

kubectl exec test-redis-master-0 -- redis-cli lpush register_queue '<stac_item>'
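Quoting a full STAC item on the command line is error-prone, so it can help to build the command programmatically. The sketch below assembles the same kubectl invocation as the redis example above as an argument list. The helper name redis_register_command and the truncated sample item are hypothetical; the pod name and queue name are taken from the command above.

```python
import json
import shlex


def redis_register_command(stac_item: dict, pod: str = "test-redis-master-0") -> list[str]:
    """Build the kubectl argument list that pushes a STAC item onto register_queue."""
    payload = json.dumps(stac_item, separators=(",", ":"))
    return ["kubectl", "exec", pod, "--",
            "redis-cli", "lpush", "register_queue", payload]


# Hypothetical, heavily truncated STAC item used only for illustration.
item = {"type": "Feature", "id": "example-item"}
command = redis_register_command(item)

# Show a shell-safe rendering of the command.
print(shlex.join(command))
# To actually run it against a cluster: subprocess.run(command, check=True)
```

Passing the argument list to subprocess.run avoids shell quoting issues with the JSON payload altogether.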

Demo deployment

There is a set of sample data in the tests/data directory. The list of STAC items is stored in tests/demo_product_list.json. The configuration of the sample data is included in the tests/values-testing.yaml config file.

Contributing

Interested in contributing to the View Server? Thanks so much for your interest! We are always looking for improvements to the project and contributions from open-source developers are greatly appreciated.

If you want to contribute code, get in touch via email at office@eox.at with a brief outline of the planned fix or feature, and we will set up an account for you to create a Merge Request.

Tagging

This repository uses bump2version for managing tags. To bump a version use

bump2version <major|minor|patch> # or bump2version --new-version <new_version>

git push && git push --tags

Pushing a tag in the repository automatically creates:

versioned docker images for each service

a new version of VS helm chart

Versioning and branches

View Server adheres to Semantic Versioning and follows these rules:

Given a version number MAJOR.MINOR.PATCH, we increment the:

MAJOR version when we make incompatible API changes

MINOR version when we add functionality in a backward compatible manner

PATCH version when we make backward compatible bug fixes
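The increment rules above amount to simple arithmetic on the three version components. The following sketch illustrates that arithmetic only; it is not how bump2version is implemented, and the function name bump and the version 4.1.3 are hypothetical.

```python
def bump(version: str, part: str) -> str:
    """Return `version` (MAJOR.MINOR.PATCH) with the given part incremented."""
    major, minor, patch = (int(n) for n in version.split("."))
    if part == "major":
        # Incompatible change: lower components reset to zero.
        return f"{major + 1}.0.0"
    if part == "minor":
        # Backward compatible feature: patch resets to zero.
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        # Backward compatible bug fix.
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown part: {part!r}")


print(bump("4.1.3", "patch"))  # 4.1.4
print(bump("4.1.3", "minor"))  # 4.2.0
print(bump("4.1.3", "major"))  # 5.0.0
```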

Active development takes place on the main branch. New features or maintenance commits should be made against this branch in the form of a Merge Request from a feature branch.

The current stable version of View Server is in branch 4.1. This branch should receive only bug fixes in the form of a Merge Request.

Bug fix branches should originate from a stable branch. They should be merged into the respective stable branch, every other applicable stable branch, and the main branch if possible.

Any breaking changes in any individual service or chart need to have a corresponding migration plan documented in BREAKING.md.

Development

You can get far by setting up a development environment locally. However, for some multi-component tasks that require debugging against the database or Redis, it may pay off to spin up a cluster or use devcontainers.

Local

To set up a component for local development, run the following in your virtual env.

cd <component-to-develop>

pip install -r requirements.txt --extra-index-url https://gitlab.eox.at/api/v4/projects/315/packages/pypi/simple

pip install -r requirements-dev.txt

pip install -r requirements-test.txt

pip install -e .

Flake8, mypy and black can be run as commands from the terminal:

flake8 # at the root folder

mypy . # at the root folder

black file.py # to autoformat a file. also possible with a path

These tools can also be integrated into your IDE/Editor.